Dynamic Memory Manager Optimization

Introduction and related work

Many general-purpose memory allocators implemented for C and C++ provide good runtime and low memory usage for a wide range of applications Johnstone1999, Lea. Currently, the basis of an efficient DMM implementation in a general-context are already well established. A survey of dynamic storage allocation was published in 1995 by Wilson Wilson1995, and since then it has been considered one of the main references in DMM design. However, using specialized “Dynamic Memory Managers (DMMs)” that take advantage of application-specific behavior can dramatically improve application performance Barrett1993, Grunwald1993. In this regard, three out of the twelve integer benchmarks included in SPEC (parser , gcc , and vpr SPEC2010) and several server applications, use one or more custom DMMs Berger2001.

Current applications are developed using C++, where dynamic memory is allocated via the operator new() and deallocated by the operator delete(). Most compilers simply map these operators directly to the malloc() and free() functions of the standard C library.

On the one hand, studies have shown that dynamic memory management can consume up to 38% of the execution time in C++ applications Calder1995. Thus, the performance of dynamic memory management can have a substantial effect on the overall performance of C++ applications. On the other hand, new embedded devices must rely on dynamic memory for a very significant part of their functionality due to the inherent unpredictability of the input data. These devices also integrate multiple services such as multimedia and wireless network communications. It heavily influences the global memory usage of the system Atienza2006a. Finally, energy consumption has become a real issue in overall system design (both embedded and general-purpose) due to circuit reliability and packaging costs Vijaykrishnan2003. Thus, the optimization of the dynamic memory subsystem has three goals that cannot be seen independently: performance, memory usage and energy consumption. There cannot exist a memory allocator that delivers the best performance and least memory usage for all programs; however a custom memory allocator that works best for a particular program can be developed RiscoMartin2009b.

To reach high performance, for instance, programmers write their own ad hoc custom memory allocators as macros or monolithic functions in order to avoid function call overhead. This approach, implemented to improve application performance, is enshrined in the best practices of skilled computer programmers Meyers1995. Nonetheless, this kind of code is brittle and hard to maintain or reuse, and as the application evolves, it can be difficult to adapt the memory allocator as the application requirements vary. Moreover, writing these memory allocators is both error-prone and difficult. Indeed custom and efficient memory allocators are complicated pieces of software that require a substantial engineering effort.

Therefore, to design “optimal” memory allocators, flexible and efficient infrastructures for building custom and general-purpose memory allocators have been presented in the last decade Berger2001, Atienza2006a, Atienza2006. All the proposed methodologies are based on high-level programming where C++ templates and object-oriented programming techniques are used. It allows the software engineer to compose several general-purpose or custom memory allocator mechanisms. Thus, we define a DMM as a composition of one or more memory allocators. The aforementioned methodologies enable the implementation of custom DMMs from their basic parts (e.g., de/allocation strategies, order within pools, splitting, coalescing, etc.). In addition, Atienza2006a and Atienza2006 provide a way to evaluate the memory usage and energy consumption, but at system-level. However, all the mentioned approaches require the execution of the target application to evaluate every candidate custom DMM, which is a very time-consuming task, especially if the target application requires human input (like video games). In this regard, Kiem-Phong Vo presents a DMM that allows the definition of multiple memory regions with different disciplines Vo1996. However, this approach cannot be extended with new functionality and is limited to a small set of user-defined functions for memory de/allocation. Thus, new approaches to measure performance, memory usage and energy consumption are needed when designing a custom or general-purpose DMM.

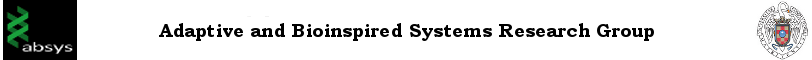

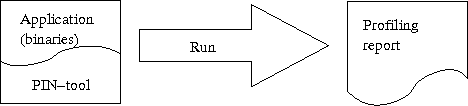

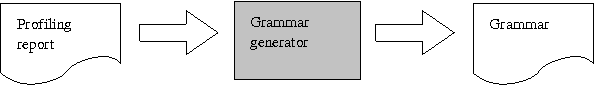

Recently, we have developed a framework based on grammatical evolution to automatically design optimized DMMs for a target application, minimizing memory usage and energy consumption and maximizing performance. Figs. 1, 2 and 3 depict the optimization process. First, as Fig. 1 shows, we run the application under study together with an instrumentation tool, which logs all the required information in an external file: identification of the object created/deleted, operation (allocation or deallocation) object size in bytes and memory address. Since all the exploration process is performed simulating the generated DMMs with the profiling report, this task must be done just once. In the following phase, as Fig. 2 shows, we automatically examine all the information contained in the profiling report, obtaining a specialized grammar for the target system. As a result, some incomplete rules in the original grammar, such as the different block sizes, are automatically defined according to the obtained profiling. To this end, we have developed a tool called “Grammar Generator”. The last phase is the optimization process. As Fig. 3 depicts, this phase consists of a GE algorithm that takes as input the grammar generated in the previous phase and the profiling report of the application. It also uses a DMM simulator, implemented to simulate the behavior of every DMM generated by the grammar when it is used in the application. Our GE algorithm is constantly generating different DMM implementations from the grammar file. When a DMM is generated (DMM(j) in Fig. 3), it is received by the DMM simulator. Next, the simulator emulates the behavior of the application, debugging every line in the profiling report. Such emulation does not de/allocate memory from the computer like the real application, but maintains useful information about how the structure of the selected DMM evolves in time. After the profiling report has been simulated, the DMM simulator returns back the fitness of the current DMM to the GE algorithm. The fitness is computed as a weighted sum of the performance, memory usage and energy consumed by the proposed DMM for the target embedded system and application under study.

DMMs optimization flow. In the first phase, we generate an initial profiling of the de/allocation pattern. In the second phase, we automatically analyze the profiling report to generate the final grammar. Finally, in the third phase an exploration of the design space of DMMs implementation is performed using GE.

An initial design of the GE algorithm was presented in RiscoMartin2009b and the DMM simulator in RiscoMartin2010a. However, the design space was designed in terms of previous DMM classifications performed by Berger Berger2001 and Atienza Atienza2006. Such classification implied a complex taxonomy of DMMs, as well as a huge search space. The execution time of both the simulator and the GE algorithm became prohibitives and a parallelization was needed RiscoMartin2010b. Furthermore, the profiling of the application was obtained overloading both malloc() and free() functions. Such mechanism required the modification and re-compilation of every target application. Currently, with the use of instrumentation code, the re-compilation is no longer needed, which is a great advantage. Thus, we have renewed both the GE algorithm and the simulator reaching a straight forward design that offer better results in affordable computational times (from minutes to several hours, depending on the target application). We present a flexible, stable and highly-configurable DMM design framework. By profiling of the target application, the DMM simulator can receive offline the dynamic behavior of the application and evaluate all the aforementioned metrics. As a result, the simulator is integrated into a search mechanism based on genetic programming in order to obtain optimum DMMs.

Downloads

- External tools

- Pintool - http://www.pintool.org

- Profiling reports (to be uploaded …)

- lyndsay

- boxed-sim

- cfrac

- gcbench

- espresso

- roboop